We Need to Get Smart About How Governments Use AI

China is exporting artificial intelligence (AI) technologies to other countries, particularly to autocratic-leaning states. Why are countries racing to embrace this new technology?

What do many people get wrong when they think of artificial intelligence?

One of the big misconceptions is that AI is a future technology, akin to humanoid robots that appear in television shows and movies. But AI is not a speculative technology—it already has many real-world applications, and ordinary people rely on it in one form or another every day.

AI already powers many common mobile apps and programs. In fact, iPhones, Amazon’s Alexa, Twitter and Facebook feeds, Google’s search engine, and Netflix movie queues—to name just a few examples—all rely on AI.

That said, there is a vast gap between the AI processing needed for the complex geospatial functions performed by self-driving cars and, say, the basic algorithms used for more routine tasks like filtering spam emails.

How is AI being misused?

Historian of technology Melvin Kranzberg famously stated, “Technology is neither good nor bad; nor is it neutral.” AI technology follows this principle. It’s dual-use, meaning that it can serve both military and peaceful purposes.

For example, AI is being used to spot pathogens in blood samples and to analyze MRI scans for cancer. These algorithms can work more quickly and accurately than their human counterparts, allowing for rapid monitoring and identification of malignant cells.

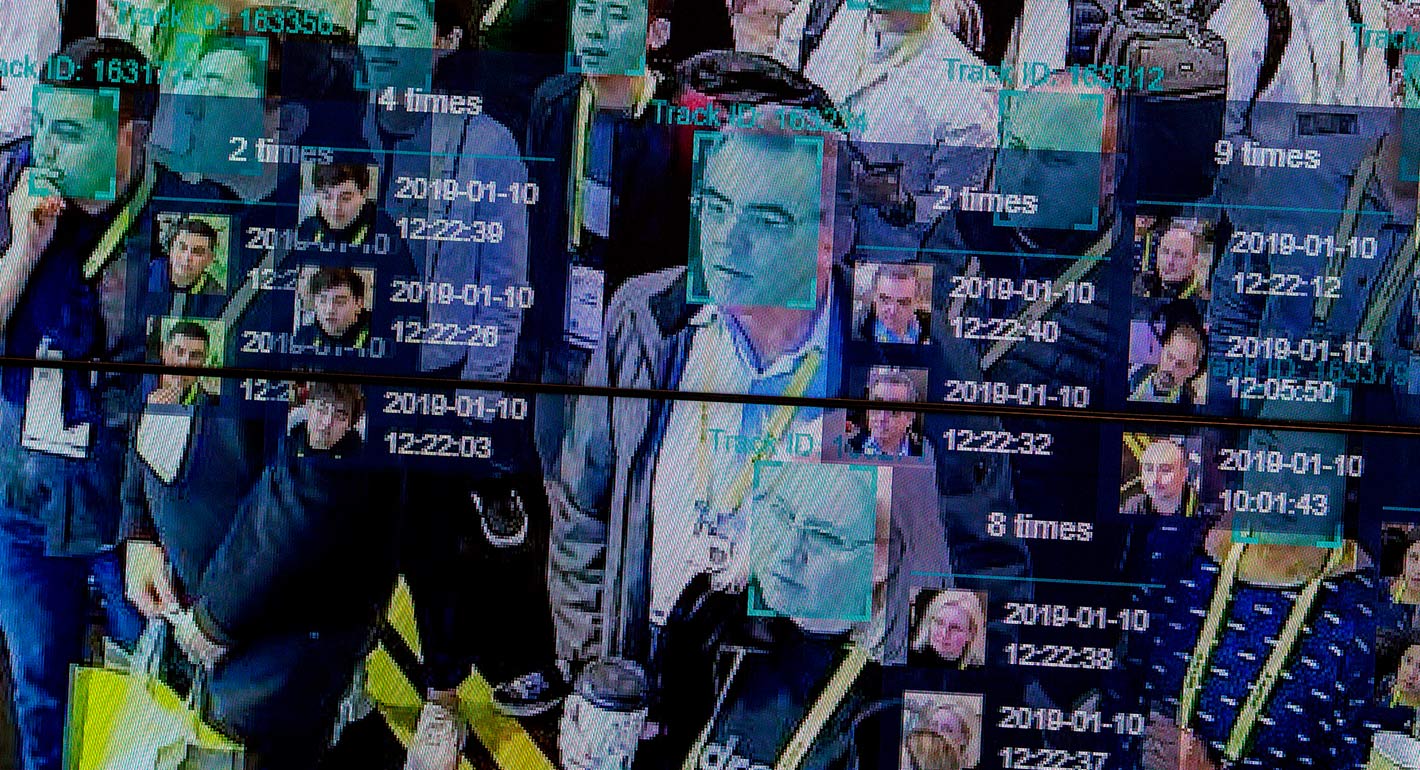

But AI can also be channeled maliciously and destructively. For one thing, AI’s surveillance capability offers startling new ways for authoritarian and illiberal states to monitor and control their citizens.

China has been one of the frontrunners in exploiting this technology for surveillance purposes. For example, in Xinjiang and Tibet, China is using AI-powered technology to combine multiple streams of information—including individual DNA samples, online chat history, social media posts, medical records, and bank account information—to observe every aspect of individuals’ lives.

But China is not just using AI to manage restive populations in far-flung provinces. Beijing is also rolling out what it calls “social credit scores” into mainstream society. These scores use big data derived from public records, private technology platforms, and a host of other sources to monitor, shape, and rate individuals’ behavior as part of a broader system of political control.

Second, AI can manipulate existing information in the public domain to rapidly spread disinformation. Social media platforms use content curation algorithms to drive users toward certain articles, in order to influence their behavior (and keep users addicted to their social media feeds). Authoritarian regimes can exploit such algorithms too. One way they can do so is by hiring bot and troll armies to push out pro-regime messaging.

Beyond that, AI can help identify key social-media influencers, whom the authorities can then coopt into spreading disinformation among their online followers. Emerging AI technology can also make it easier to push out automated, hyperpersonalized disinformation campaigns via social media—targeted at specific people or groups—much along the lines of Russian efforts to influence the 2016 U.S. election, or Saudi troll armies targeting dissidents such as recently murdered journalist Jamal Khashoggi.

Finally, AI technology is increasingly able to produce realistic video and audio forgeries, known as deep fakes. These have the potential to undermine our basic ability to judge truth from fiction. In a hard-fought election, for example, an incumbent could spread doctored videos falsely showing opponents making inflammatory remarks or engaging in vile acts.

Which countries are investing the most in AI?

Several countries are spending a lot of money to beef up their AI capabilities. For instance, French President Emmanuel Macron announced in March 2018 that France would invest $1.8 billion in its AI sector to compete with China and the United States. Likewise, Russian President Vladimir Putin has publicly stated that “whoever becomes the leader in this sphere [AI] will become the ruler of the world,” implying a hefty Russian investment in developing this technology as well. Other countries like South Korea have also made big AI investment pledges.

But the world leaders in AI are the United States and China. For Beijing, AI is an essential part of a broader system of domestic political control. China wants to be the world leader in AI by 2030, and has committed to spending $150 billion to achieve global dominance in the field.

How do China’s AI capabilities stack up against those of the United States?

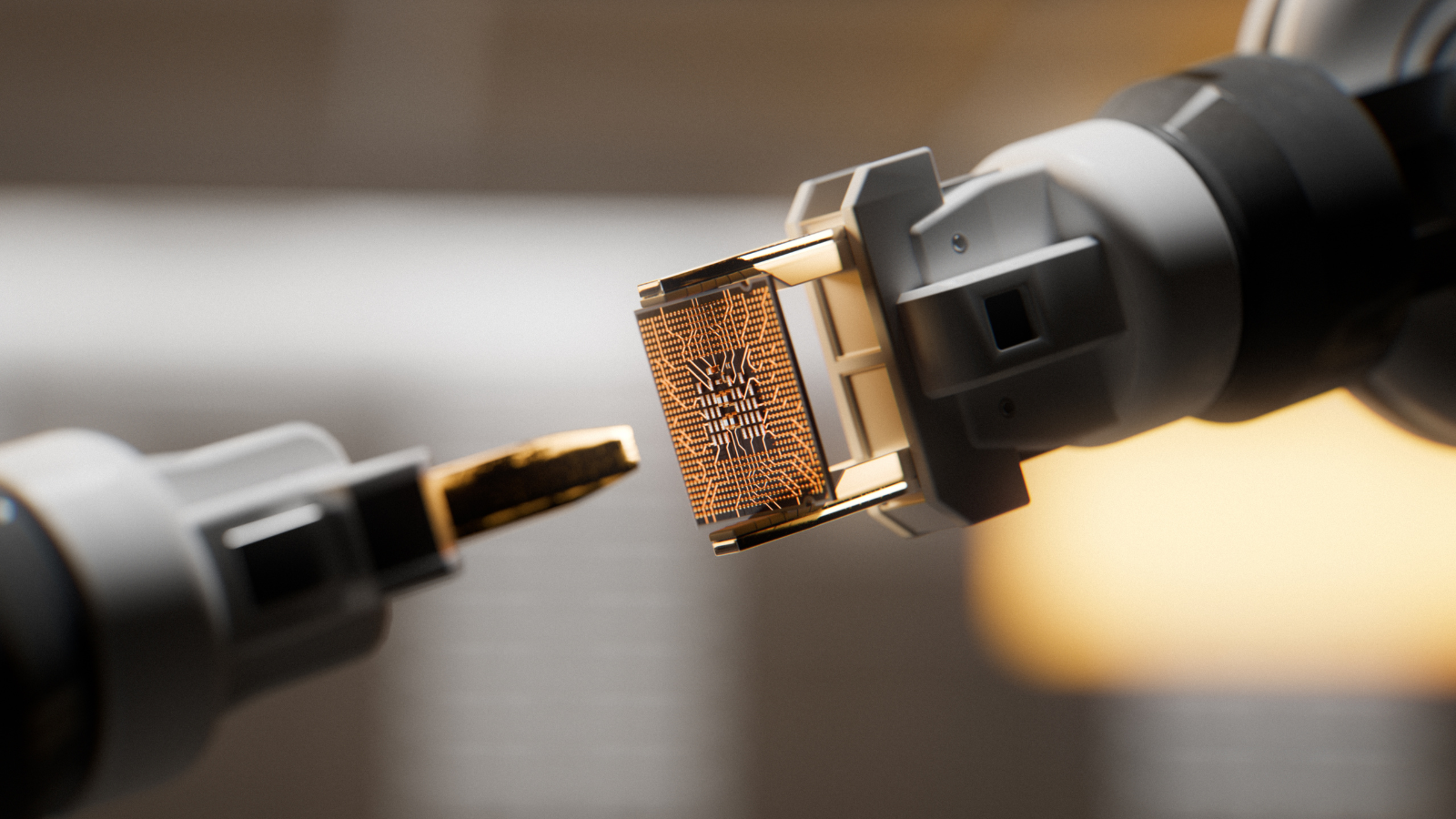

There are three central components of AI—training data for machine learning, strong algorithms, and computing power.

Of those three, China has training data in abundance and an improving repertoire of algorithms. But the country’s ability to manufacture advanced computer chips—and tap the computing power they supply—lags behind U.S. capabilities.

By contrast, the United States has the world’s most advanced microchips and most sophisticated algorithms. Yet Americans increasingly trail behind their Chinese counterparts when it comes to the sheer amount of digital data available to AI companies. This is because Chinese companies can access the data of over one billion domestic users with almost non-existent privacy controls. And data increasingly makes all the difference when it comes to building AI companies that can outperform competitors, the reason being that large datasets help algorithms produce increasingly accurate results and predictions.
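The link between dataset size and accuracy can be illustrated with a toy simulation. The sketch below is not drawn from any real AI system: it simply estimates a made-up underlying rate (`true_rate`, an assumption chosen for illustration) from samples of different sizes and measures how far off the estimate tends to be. The function name, sample sizes, and seed are all hypothetical.

```python
import random

def mean_abs_error(sample_size, true_rate=0.3, trials=500, seed=0):
    """Average error when estimating `true_rate` from `sample_size` observations.

    All values here are invented for illustration only.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total_error = 0.0
    for _ in range(trials):
        # Draw `sample_size` yes/no observations and count the "yes" outcomes.
        hits = sum(1 for _ in range(sample_size) if rng.random() < true_rate)
        estimate = hits / sample_size
        total_error += abs(estimate - true_rate)
    return total_error / trials

errors = {n: mean_abs_error(n) for n in (10, 100, 1000)}
for n, err in errors.items():
    print(f"{n:>5} observations -> average error {err:.3f}")
```

In this simplified setting, the average error shrinks as the number of observations grows, which is the basic statistical reason that access to more data tends to yield more accurate predictions.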

What kind of AI is China exporting to other countries?

China sees technology as a way to achieve its grand strategic aims. Part of this strategy involves spreading AI technology to support authoritarianism overseas. The Chinese are aggressively trying to develop their own AI, which they can then vigorously peddle abroad.

As China develops its AI sector, it is promoting a digital silk road (as part of its Belt and Road Initiative, which involves Chinese investment in other countries’ infrastructure) to spread sophisticated technology to governments worldwide.

These efforts include plans to construct a network of “smart” or “safe” cities in countries such as Pakistan and Kenya. These cities have extensive monitoring technology built directly into their infrastructure.

In Latin America, China has sold AI and facial recognition software to Ecuadorian, Bolivian, and Peruvian authorities to enhance public surveillance.

Likewise, in Singapore, China is providing 110,000 surveillance cameras fitted with facial recognition technology. These cameras will be placed on all of Singapore’s public lampposts to perform crowd analytics and assist with anti-terrorism operations.

Similarly, China is supplying Zimbabwe with facial recognition technology for its state security services and is building a national image database.

Additionally, China has entered into a partnership with Malaysian police forces to equip officers with facial recognition technology. This would allow security officials to instantly compare live images captured by body cameras with images stored in a central database.

Why does China want to export its AI overseas?

China shrewdly assumes that the more it shifts other countries’ political systems closer to its model of governance, the less of a threat those countries will pose to Chinese hegemony.

Beijing also knows that providing critical technology to eager governments will make them more dependent on China. The more that governments rely on advanced Chinese technology to control their populations, the more pressure they will feel to align with Beijing’s strategic interests.

In fact, China’s AI strategy is blunt about the technology’s perceived benefits. A press release from the Ministry of Industry and Information Technology states that AI “will become a new impetus for advancing supply-side structural reforms, a new opportunity for rejuvenating the real economy, and a new engine for building China into both a manufacturing and cyber superpower.”

What new developments in AI are coming next?

Scientists group AI applications into three categories of intelligence: weak (or narrow) artificial intelligence, artificial general intelligence, and artificial superintelligence.

At the moment, all existing AI technologies fall into the narrow category. Narrow AI systems perform tasks that need human intelligence, such as translating texts or recognizing certain images. At times, narrow AI might be better than humans at performing those specific assignments. But narrow AI machines can only do a limited range of tasks. For example, they couldn’t read a document and come up with new policy recommendations based on its original ideas.

One of the most important technical approaches in narrow AI is machine learning. This statistical process begins with a dataset and tries to derive a rule or procedure that can explain the data or predict future data.
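A minimal sketch of that idea, using ordinary least squares as one simple example of "deriving a rule from data." The dataset is invented for illustration, and the names `fit_line` and `predict` are hypothetical, not part of any particular AI product.

```python
def fit_line(xs, ys):
    """Derive a straight-line rule (slope, intercept) from example data
    using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented dataset: hours of study and the resulting test score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 55, 59, 59]

slope, intercept = fit_line(hours, scores)

def predict(x):
    """Apply the learned rule to data the model has not seen."""
    return slope * x + intercept

print(f"learned rule: score = {slope:.1f} * hours + {intercept:.1f}")
print(f"predicted score for 6 hours: {predict(6):.1f}")
```

The program is never told the relationship between hours and scores. It infers a rule from the examples and then applies that rule to a new case.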

Yet there are two big challenges associated with machine learning. First, even well-trained algorithms often falter. An image-classifying model may falsely categorize nonsensical images as a specifically defined object. AI technology can be tripped up when trying to recognize closely related images as well. For example, even if an AI algorithm has viewed thousands of images of house cats, it can’t recognize pictures of ocelots.
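One reason for this kind of failure is that many classifiers can only answer with a label they were trained on. The sketch below uses a nearest-centroid classifier, a deliberately simple stand-in for a real image model, with two invented numeric features per image. The labels, feature values, and the `classify` function are all assumptions made for illustration.

```python
import math

# Hypothetical two-number summaries of each training category.
# A real image model would use far richer features.
CENTROIDS = {
    "house cat": (0.2, 0.3),
    "dog": (0.8, 0.7),
}

def classify(features):
    """Return the closest known label. There is no 'none of the above' option."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))

ocelot = (0.35, 0.55)   # invented features for an animal the model never saw
nonsense = (5.0, -3.0)  # invented features far from anything in training

print("ocelot   ->", classify(ocelot))
print("nonsense ->", classify(nonsense))
```

Because the model must pick from its known categories, it confidently assigns a familiar label both to the unfamiliar animal and to the meaningless input.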

Second, there is the problem of opacity. As algorithmic models become more mathematically complex, it is increasingly difficult to follow the logic of how a machine reached a certain conclusion. This is known as the black box problem: data scientists can see what information goes into the system and what comes out, but they can’t see its internal workings.

Artificial general intelligence, on the other hand, refers to machines that are, as Tim Urban has written, “as smart as a human across the board—a machine that can perform any intellectual task that a human being can.” Unsurprisingly, this is a much more complicated endeavor. Scientists have yet to create such a machine. Some optimists predict that artificial general intelligence may first emerge around 2030, when scientists can finally reverse-engineer the human brain for computer simulations. But many scientists are much more skeptical that the industry is anywhere close to achieving artificial general intelligence.

Artificial superintelligence represents a final category, in which computers will vastly exceed the capabilities of humans in all intellectual dimensions. At present, such technology remains an abstraction.

What should U.S. policymakers do about AI?

Policymakers must take the implications of AI more seriously. The proliferation of this technology among like-minded authoritarian regimes presents a big long-term risk.

But authoritarian regimes are not the only ones who can misuse AI. Democracies must look inward and develop their own regulatory frameworks. Two relevant problems are implicit bias and reinforced discrimination in algorithms.

When machine learning is used in policing or healthcare, for instance, it can reinforce existing inequalities and produce or perpetuate discriminatory behavior. For example, the criminal-justice sector has been an early adopter of AI-based predictive analysis. But some studies reveal that the programs used frequently rely on biased data. Crime statistics show that African Americans are far more likely to be arrested than Caucasians.

Yet machine algorithms rarely consider that police bias may be the reason for disproportionate African American arrests. Instead, the default algorithmic assumption is that African Americans are more prone to commit crimes. This dubious conclusion forms the basis for the algorithms’ future predictions. This underscores a vital principle: AI machines are only as good as the data with which they are trained.
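A toy calculation shows how this can happen. In the sketch below, every number is invented: two hypothetical groups offend at exactly the same rate, but one is policed more heavily, so a larger share of its offenses ends in arrest. A naive "risk model" trained only on arrest records (the function `train_risk_model` is an illustrative assumption, not a description of any deployed system) then rates that group as riskier.

```python
# Invented numbers for illustration only.
POPULATION = 10_000          # people in each group
TRUE_OFFENSE_RATE = 0.05     # identical for both groups
ARREST_PROBABILITY = {       # share of offenses that lead to an arrest
    "group_a": 0.2,
    "group_b": 0.4,          # group B is policed twice as heavily
}

def train_risk_model(arrest_probability):
    """'Learn' a per-group risk score from arrest records alone."""
    model = {}
    for group, p_arrest in arrest_probability.items():
        offenses = POPULATION * TRUE_OFFENSE_RATE
        arrests = offenses * p_arrest          # what the data actually records
        model[group] = arrests / POPULATION    # arrest rate treated as "risk"
    return model

risk = train_risk_model(ARREST_PROBABILITY)
for group, score in risk.items():
    print(f"{group}: predicted risk {score:.3f}")
print(f"risk ratio (B vs. A): {risk['group_b'] / risk['group_a']:.1f}")
```

The model never sees the true offense rate, only the arrests. The difference in its scores therefore reflects the difference in policing, not in behavior.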

To make sure that AI is used responsibly, there must be stronger connections among policymakers, engineers, and researchers. In other words, those responsible for designing, programming, and implementing AI systems should share responsibility for applying and upholding human-rights standards. Policy experts should have regular, open conversations with engineers and technologists, so that all three groups are aware of the potential misuses of AI. That way, they can develop the right responses early on.

Read Steven Feldstein’s article on how artificial intelligence is reshaping government repression in the Journal of Democracy.

About the Author

Senior Fellow, Democracy, Conflict, and Governance Program

Steve Feldstein is a senior fellow at the Carnegie Endowment for International Peace in the Democracy, Conflict, and Governance Program. His research focuses on technology, national security, the global context for democracy, and U.S. foreign policy.

Carnegie does not take institutional positions on public policy issues; the views represented herein are those of the author(s) and do not necessarily reflect the views of Carnegie, its staff, or its trustees.
