Source: Getty

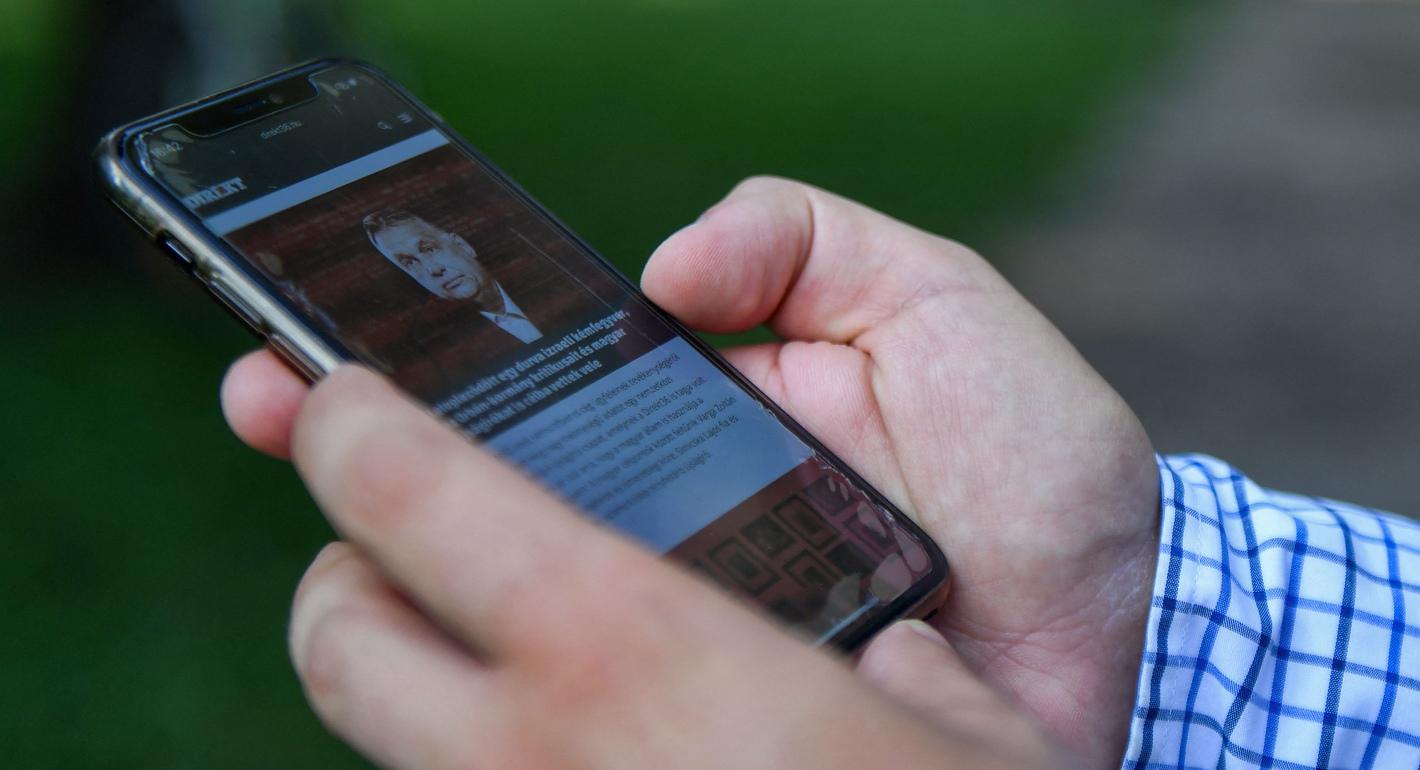

Disinformation and influence campaigns from domestic and international actors have thrived during the pandemic. European policies that build public trust in democratic institutions should be accompanied by regulation of online platforms that focuses on transparency and accountability.

Disinformation has become a central subject of debate and concern in Europe. Influence operations have particularly thrived in the context of the coronavirus pandemic, fostering divisions and lowering trust in public institutions. Influence operations not only threaten Europe’s stability because of the anti-system narrative they fuel, but they also have the potential to stimulate racially motivated violence and harm deliberative democracy.

European governments are moving into a new phase in their efforts to counter disinformation. Our recent project with The Hague Program for Cyber Norms looked at how the governments of several European countries (France, Germany, Hungary, Serbia, Sweden, and the United Kingdom) have adjusted their counter-disinformation strategies during the pandemic. The project identified two major trends. First, governments are realizing that the distinction between domestic and foreign disinformation has become increasingly obsolete. Second, alongside their attempts to regulate online platforms, governments are starting to think more about the democratic character of their counter-disinformation measures.

As influence operations have become faster and cheaper in the digital environment, resulting in heightened activity by malicious actors, most European governments have designed new policies to tackle these operations. In the last decade, most of the governments’ policies have centered on foreign, state-sponsored disinformation, notably from Russia. Influence operations—such as those surrounding the downing of flight MH17, the leaks of French President Emmanuel Macron’s email, and the assassination attempt on former Russian intelligence officer Sergei Skripal—all pointed toward the Kremlin.

In and beyond Europe, governments have feared the impact of foreign influence operations on elections. European states have progressively bolstered their counter-disinformation efforts, with the Czech Republic, Estonia, Finland, Latvia, Lithuania, Sweden, and the United Kingdom being the most advanced. In 2018, the EU published its first Action Plan Against Disinformation, which pointed to pro-Kremlin disinformation as “the greatest threat to the EU.”

The past few years have seen China develop more systematic influence operations, although these are not yet as effective as those of other actors like Russia. “We need similar but more adapted capacity to deal with the other major challenger of our approaches, China,” said a high-ranking official from the European External Action Service in 2019 during an interview. Russia and China have weaponized the pandemic to further the narrative that liberal democracies are corrupt and incompetent. They have done so by spreading disinformation on the economic, health, and social effects of the virus with the aim of deepening political, racial, and economic divisions. As argued by Rasmus Kleis Nielsen and Richard Fletcher, the digitalization of the media environment has led to “democratic creative destruction.”

However, the current crisis has also clearly demonstrated the role of domestic actors in the spread of disinformation as they relay conspiracy theories and dubious health advice, indirectly supporting external narratives. Research has suggested that human users are the main amplifiers of online propaganda, not bots. Our project’s national reports all find that the bulk of COVID-19-related disinformation in EU member states was driven domestically and not from external sources. In France, populist movements have capitalized on existing divisions deepened by the pandemic to gain traction. In Hungary, the government implicitly allows the circulation of disinformation asserting Western failure and Asian excellence in pandemic management. “To what extent are we scapegoating [others] and ignoring domestic sources of disinformation?” asked an expert in one of our scholar-practitioner workshops. With even European leaders, such as British Prime Minister Boris Johnson and Hungarian Prime Minister Viktor Orbán, spreading false information, it becomes difficult to discount the internal factors that fuel the disinformation machine.

The digital and intermestic (connecting domestic and international policy) nature of information manipulation is what makes it difficult to address. Online influence operations are hard to pin down because they largely depend on the relay of data by secondary and often private actors to reach their target audience. These information networks operate globally, not only on mainstream social media (such as Facebook, Twitter, and YouTube) but also on forums like Reddit, Discord, and 4chan or encrypted messaging apps such as WhatsApp and Telegram. Influence operations often use bots and rely on algorithmic amplification, allowing messages to spread rapidly. User engagement with so-called clickbait posts, which are designed to trigger emotional responses, further amplifies their reach.

Consequently, countering disinformation requires extremely broad strategies. Deterrence and debunking are not enough. They may even have adverse effects. Responses must be accompanied by more transparency from and accountability over online platforms. Effective strategies require a comprehensive and borderless approach rooted in international collaboration.

European policy debates on disinformation have begun to change in recognition of these complexities. They have shifted from concerns over external election interference and a focus on foreign actors (mainly Russia and China) to a better understanding of how these dynamics operate alongside domestic and internal factors. This affects policy responses, which now focus not only on external political actors but increasingly also on tech companies, domestic individuals, and internal political actors. In the United Kingdom, the Cabinet Office; the Department for Digital, Culture, Media, and Sport; the Department of Health and Social Care; and the Foreign, Commonwealth and Development Office have tackled domestic and foreign false information related to the pandemic, in alignment with the national security Fusion Doctrine mandated in 2018.

The distinction between foreign and domestic disinformation is increasingly blurred across Europe as messages serve diverse actors across borders, whether they are connected or not. Perhaps most importantly, this highlights the fine lines between disinformation, influence operations, and public diplomacy. It is notoriously difficult to measure the effectiveness of disinformation and counter-disinformation measures because it is difficult to clearly define their goals. How can one determine the intention behind false news when it differs depending on the beholder’s standpoint? Where should governments draw the fine line between public diplomacy and propaganda? And when strategic communication is designed to fight but also spread different types of disinformation, as well as to exercise soft power, which measures are suited and ethically acceptable for European states to achieve such diverse objectives?

Among the wide array of tools used to counter disinformation and influence operations, the regulation of online platforms has been high on the European agenda. The European Commission’s Digital Services Act (DSA) and Digital Markets Act (DMA) propose new measures that would increase the transparency and accountability of platforms as well as researchers’ access to their data. Noncompliance with these rules could result in fines for the tech giants. Nevertheless, the ambitious proposals are still under debate and have been criticized for their lack of focus on the rights of individual users. EU member states are overall supportive of the acts, but discussions have already revealed two key points of contention: enforcement and content moderation. The British equivalent to the DSA and DMA, the Online Safety Bill, has also come under fire for its potential damage to free speech.

European governments have exhibited different perspectives on regulating digital platforms. This undermines the legislative process of the DSA and DMA and, by extension, the development of a pan-European response to disinformation. Governments differ on what should be the subject of content moderation—that is, on whether it should only focus on illegal content (the more liberal approach) or also harmful content such as false information (a more restrictive approach). France and Germany enacted restrictive national laws against election misinformation in 2018 and online hate speech in 2017, respectively. Other European states such as Austria, Bulgaria, Lithuania, Malta, Romania, and Spain have recently introduced or modified regulations to fight disinformation. However, recent controversies and court rulings surrounding state fact-checking, new legislation, and online content moderation practices have heightened caution on this subject and put users’ rights and freedoms at the center of the debate, pushing some authorities towards the more liberal approach.

Northern European states, which pride themselves on their culture and history of protecting freedom of speech and individual rights, have a more liberal approach. Sweden, for instance, would like to limit moderation under the DSA to strictly illegal content and to focus on enhancing the transparency of social media companies. At the opposite end of the spectrum, European governments with authoritarian tendencies have had few qualms about punishing those guilty of spreading fake news while themselves spreading disinformation against political opponents. In Hungary, the 2020 Enabling Acts gave the government the power to rule by decree and allowed it to make the dissemination of fake news a criminal act punishable by up to five years of imprisonment. Outside the EU, in Serbia, a 2006 law criminalizes the creation and spread of false information that disturbs public order, making this punishable by a prison term of up to five years. While these laws have led to arrests and have dissuaded the public, they also serve political purposes as they have been used to silence opposition and criticism of the regimes’ handling of the coronavirus pandemic.

The European debate on regulating online platforms has raised two important questions. First, how effective is regulation in tackling disinformation and/or countering influence operations? Second, what are its implications for democracy? Measures enforcing more transparency and accountability online are necessary to protect users’ human and civil rights. Search engines, advertising, and algorithms facilitate the creation and spread of disinformation when operating in an information system that benefits businesses over consumers. What is more, online platforms evolve and multiply quickly, and their regulation needs to remain comprehensive and up to date, which is no mean feat given the difficulties in aligning European positions.

Regulating online platforms risks furthering the narrative that some European governments have been trying to increase their control over populations and information, a narrative that has been particularly visible since the start of the coronavirus pandemic. Notice-and-takedown systems and obligations lead platforms to over-censor content in order to avoid legal liability. This could not only stimulate the creation and use of alternative platforms that are less regulated and more difficult to monitor but also aggravate the crisis of trust in public institutions and democracy. These conditions foster the formation of extreme political views, public dissatisfaction, polarization, and conflict.

European governments are somewhat broadening their approaches to take on board such lessons. In France, where digital skills are a mandatory part of the school curriculum, the Ministry of Education has designed material on “scientific disinformation” to enable high school students to critically interact with pandemic-related news. In the United Kingdom, the government has just released an Online Media Literacy Strategy, under which new initiatives include a media literacy taskforce, a UK media literacy forum, and a media literacy communications campaign. In Sweden, the Committee on National Investment in Media and Information Literacy has been tasked with working to increase people’s resilience to disinformation, propaganda, and cyber hatred. An agency for psychological defense is also to be established in 2022. In March, Germany launched a Digital Education Initiative that includes a new learning app and a national education platform. At the EU level, the Democracy Action Plan promises to promote free and fair elections, strengthen media freedom and pluralism, and counter disinformation for more resilient democracies.

Even if the impact of digital literacy remains to be proven, governments will need to support many such initiatives, as online content regulation treats only the symptoms rather than the cause by addressing information manipulation separately from public distrust. Some governments are beginning to appreciate that higher degrees of education are associated with less distrust in news, and that higher exposure to false information is linked to less media trust.

For the regulation of online platforms to be effective, it must focus on procedural transparency and accountability (rather than content moderation) as well as allow for more competition and pluralism. The use of decentralized social networks enabled by blockchain technology presents promising prospects and has already attracted researchers’ attention. Moreover, regulation should be complemented by policies that directly target citizens’ confidence in governance, including through civic education, digital and media literacy, and public-interest journalism.

Considering the growing impact of disinformation and influence operations, European states have recently designed new policies that have revealed divergences among them as well as raised new questions of effectiveness and legality. First, the blurring lines between domestic and foreign influence have complicated the design of policy responses. Second, the drafting of regulation for online platforms has uncovered major implications for democracy and digital governance well beyond Europe. A new phase is beginning in counter-disinformation, but the changing nature of the challenge means a lot more remains to be done.

If Europe wants to address information manipulation durably, it must design policies that halt both its spread and the narratives on which it feeds. New regulation must bring about structural change in the current business-oriented information order. Content moderation risks contributing to polarization and having a detrimental impact on free speech. To tackle the narratives on which information manipulation feeds, the governance of online platforms must be accompanied by policies that build public trust. European governments and EU institutions must focus even more strongly on the benefits of liberal democracy and pursue strategies rooted in the core values they defend: human rights, education, transparency, and inclusive dialogue. As argued elsewhere, young Europeans expect a lot from the EU when it comes to democracy—this calls for fundamental discussions on the future of information flows in Europe.

Sophie Vériter is an associate fellow at The Hague Program for Cyber Norms, Leiden University, and coordinated the project from which this article draws. This project looked at how the governments of several European countries (France, Germany, Hungary, Serbia, Sweden, and the United Kingdom) have adjusted their counter-disinformation strategies during the pandemic.

This article is part of the European Democracy Hub initiative run by Carnegie Europe and the European Partnership for Democracy.

Carnegie does not take institutional positions on public policy issues; the views represented herein are those of the author(s) and do not necessarily reflect the views of Carnegie, its staff, or its trustees.
