
Ian Klaus, Mark Baldassare, Rachel George, …


Technology Federalism: U.S. States at the Vanguard of AI Governance

U.S. states have been acting as regulators of first resort on a number of emergent technologies and core tech policy areas where Congress has been slow to legislate. Nowhere is this emerging technology federalism more widely apparent and potentially consequential than in the state-level response to AI.

Well before artificial intelligence (AI) exploded into the public consciousness with the 2022 launch of ChatGPT, advances in a suite of machine learning technologies were rapidly expanding the use of AI systems. As this occurred, societal perceptions of the technology sector were also entering a period of flux. In short, AI came of age alongside a dramatic increase in global attention to the relationship between consumer technologies and society.1

Consequently, generative AI models powered by deep neural networks (complex computational systems originally influenced by the architecture of neurons in the human brain) arrived on the global policy agenda at a time when regulators were primed for action. In the United States, their transformative implications have prompted a swift and energetic response from the federal government—albeit one facing considerable uncertainty as the 119th Congress and a new presidential administration take office.

Less recognized, however, has been a parallel response—equally active but less coordinated—at the subnational level. U.S. states, fulfilling their canonical role as laboratories of democracy, have been acting as regulators of first resort on a number of emergent technologies and core tech policy areas where Congress has been slow to legislate.2 In some instances, individual states have acted as first movers.3 In others, networks of state officials and organizations working across state lines have collaborated to foster collective action. Nowhere is this emerging technology federalism more widely apparent and potentially consequential than in the state-level response to AI.

AI in a Changing Policy Environment

In the United States, polling indicates that positive public sentiment toward the internet industry peaked in 2015, and then began declining as negative sentiment rose.4 In 2018, the term “techlash” entered the global lexicon.5 By 2020, strong majorities of Americans were “very concerned” about both the spread of misinformation and the privacy of personal data online.6 These concerns combined with a range of discontents, including worries over competition, extremism, and the political process.7 By the decade’s end, even amid robust growth within the tech sector, these anxieties were already prompting a global policy response.

Regulatory pushback had crystallized earlier and more forcefully in Europe. A suite of European Union (EU) laws, including the General Data Protection Regulation (GDPR) and the Digital Services Act and Digital Markets Act, came into effect in 2018 and 2022, respectively, while high-profile competition proceedings and litigation over individual digital rights created an increasingly stringent regulatory environment for technology firms. Over the ensuing years, elements of the European approach spread to other markets, including through digital privacy laws incorporating elements of the GDPR regime in Asia and the Americas. On its face, this diffusion bore hallmarks of the “Brussels Effect” posited by Anu Bradford, wherein the regulatory power of the European Union establishes global standards through a combination of overt emulation and de facto acceptance beyond EU borders.8

The United States, for its part, pursued a comparatively market-oriented, light-touch approach to technology policy, reflecting prior commitments to free markets and free speech.9 Nevertheless, over the past five years, there has been significant tightening across political boundaries. Between Senator Elizabeth Warren’s call to break up major tech companies during her 2020 presidential campaign and President Donald Trump’s calls to roll back Section 230 of the Communications Decency Act, a relatively bipartisan and supportive policy environment had grown distinctly more complicated by 2022.10

In short, two trends were shaping the global tech policy environment even before AI rose to the fore. The first was a set of distinct and competing governance paradigms, with Europe comparatively stringent and the United States more laissez-faire.11 The second was growing discontent over perceived overreach by technology platforms and harms wrought by their products and services.12 In the early and mid-2010s, as neural networks trained using graphics processing units were beginning to demonstrate their promise, tech companies, especially in the United States, were still widely feted for their innovation and success.13 By the end of the decade, as the computer programs AlphaGo and AlphaZero were outpacing the world’s best human competitors and OpenAI was being formed, GDPR was reshaping the digital privacy landscape and the Cambridge Analytica scandal was coming to light.14 By the early 2020s, digital governance was a well-established front-burner issue for regulators around the world.15

These developments set the scene for a relatively quick policy response just as breakthroughs in AI development coupled with massive private investment to yield highly capable generative models now used by hundreds of millions of people.16

The Shifting U.S. Landscape

In the United States, attention has focused on flagship federal policies such as President Joe Biden’s 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence; President Trump’s 2025 order revoking it and directing the development of a new AI Action Plan; the Senate’s 2024 bipartisan road map; and the launch of the U.S. AI Safety Institute.17 These are important developments, though their overall impact on the trajectory of AI development and deployment remains to be seen. Trump’s return as U.S. president adds significantly to this uncertainty and may dramatically reorient federal AI policy.

Even during the Biden administration, however, amid persistent—though not absolute—congressional gridlock and lingering tensions over digital governance between the United States and key allies, there was a durable perception in some quarters of the American approach as permissive, sclerotic, and insufficiently rights-protective.18

That perception, however, has masked a dramatic shift in U.S. policymaking at the subnational level, a shift that has put U.S. states at the vanguard of American AI law. In state capitals, as much as in Washington, DC, the wheels of policymaking have been spinning full bore. States have introduced a vast array of bills, passed laws, launched programs, and undertaken reorganization efforts.19 Action has not been confined to executives or legislatures alone, nor to red or blue states. And while this trend poses a range of questions, it has advanced with such speed and energy that, today, meaningfully appraising the extent and direction of American technology policy requires attention to a dynamic and burgeoning state landscape.

The Privacy Antecedents of Technology Federalism

Since 2018, when California enacted the California Consumer Privacy Act, no fewer than nineteen states have adopted comprehensive consumer privacy laws, of which a majority regulate the use of automated decisionmaking or profiling systems.20 These privacy laws have established an initial patchwork of regulation applicable to AI as they set new digital privacy rules over the past several years.

This crop of state rules regulating automated decisionmaking focuses on applications producing legally consequential effects (for example, in providing or withholding public benefits, financial services, housing, education, employment, and healthcare or in law enforcement).21 For these applications, states frequently mandate disclosure, impact assessments, and opt-out rights for data subjects. These requirements echo GDPR Article 22, which establishes a qualified “right not to be subject to a decision based solely on automated processing, including profiling, which produces legal [or similarly significant] effects.”22

While not cast as AI laws per se, these privacy obligations extend to AI systems, which involve just this sort of automation.23 As a result, state privacy laws have set a preliminary baseline of subnational AI policy. They have also established a predicate for further and more overt policies impacting AI.

The Surge in State-Level Policymaking on AI

States have progressed quickly from regulating AI incidentally to doing so directly with a mix of supportive and supervisory policies. Like policymakers around the globe and in Washington, states are pursuing a dual imperative: to foster AI for the economic, social, and scientific benefits it promises, while safeguarding society against its potential harms. For example, Biden’s 2023 executive order speaks of AI’s “promise and peril” and seeks to promote “responsible innovation.”24 Similarly, California Governor Gavin Newsom’s executive order on generative AI (GenAI) observes that “GenAI can enhance human potential and creativity but must be deployed and regulated carefully to mitigate and guard against a new generation of risks.”25 Pennsylvania Governor Josh Shapiro’s executive order notes the technology’s potential to help state agencies serve Pennsylvanians while urging that its “responsible and ethical use . . . should be conducted within a governance structure that ensures transparency, tests for bias, addresses privacy concerns, and safeguards [the state’s] values.”26

Exploratory Efforts

Many states have established new task forces or designated existing agencies to study the ramifications of AI systems. These exploratory bodies are directed to make recommendations on fostering AI’s benefits or growth in the state, promoting its responsible development and use, and leveraging AI for public service delivery. In Maryland, for example, Governor Wes Moore created an AI Subcabinet tasked with developing and implementing an “AI Action Plan” and crafting policies and internal resources to embed values such as equity, innovation, reliability, and safety into the state’s AI workflows.27

Other states, including Massachusetts and Rhode Island, have chartered public-private AI task forces to perform similar duties, such as assessing the risks and opportunities AI presents and making recommendations to inform further policy development and state operations. Massachusetts created a task force that comprises representatives from state and local government, industry, organized labor, and academia.28 Rhode Island paired its exploratory charter with the establishment of a unified data governance structure to promote intergovernmental collaboration, set standards for data sharing, and help promote the state’s AI readiness.29

States have also taken exploratory steps through legislation. In March 2024, Utah enacted one of the nation’s first AI-focused consumer protection laws, SB 149, the Artificial Intelligence Policy Act. The law combines a comparable exploratory mandate with new state infrastructure for public-private collaboration.30 It establishes an Office of Artificial Intelligence Policy to work with industry and civil society on regulatory proposals “to foster innovation and safeguard public safety.”31 Interestingly, it also creates a “Learning Laboratory” program that encourages experimentation by offering temporary regulatory mitigation agreements to AI developers and deployers to enable them to test applications within the state.

Transparency and Misinformation

The rapid advancement and adoption of generative AI models has raised hope for a new flowering of human knowledge and discovery. Simultaneously, it has prompted warnings of a coming age of misinformation, in which truth is elusive, trust is scarce, and the informational underpinnings of democratic self-government, already straining, are further eroded.32

These worries take many shapes. They include warnings about deepfakes and synthetic content and about “hallucinations” causing models to present fabricated information as fact.33 They encompass fears that generative models will supercharge the ability of malicious actors to wage influence operations.34 And they include worries that models will free ride on copyrighted data, eroding traditional newsgathering and pressuring the viability of media organizations already reeling from prior waves of technological change while failing to apply comparable editorial standards.35

In the United States, these concerns exist against a backdrop of robust legal protection for free expression, a tradition in which, as Justice Brandeis famously wrote, the remedy for harmful speech is more speech, and efforts to police misinformation face broad suspicion.36 In short, policymakers face a landscape marked by worries over misinformation but also significant reluctance to restrain it.

Some early-moving U.S. states, however, have experimented with policies to mitigate AI-related misinformation, particularly by fostering transparency. Utah’s Artificial Intelligence Policy Act requires generative AI systems such as chatbots to “clearly and conspicuously disclose,” if asked or prompted by a person interacting with the system, that they are AI tools and not human.37 When an AI system is used to provide “the services of a regulated occupation,” such as healthcare or investment advising, its deployer must ensure that this disclosure is made proactively at the start of the exchange, conversation, or text chat.38

Other states are following suit. Bills instituting disclosure requirements, watermarking obligations, or seeking to curb misleading uses of AI have been introduced in at least ten states.39 Similar to Utah’s act, the bills include measures with broad applicability, such as California’s new AI Transparency Act, signed by Newsom in September 2024.40 As of 2026, that law will require “covered providers” of generative AI systems used by more than 1 million aggregate monthly users to incorporate digital watermarking (that is, a machine-readable “latent disclosure”) in images, video, and audio content created by their systems.41 It will also require providers to give users the ability to include visible or audible disclosures in content they create or modify, and to offer freely available detection tools enabling users “to assess whether image, video, or audio content . . . was created or altered by the covered provider’s GenAI system.”42

Some states are focusing on discrete applications, such as political campaigns. For example, both California and Florida enacted legislation in 2024 requiring disclosures in qualified political advertisements created or modified using AI.43 Florida’s law requires a prominent disclosure in political advertisements and other electioneering communications that use AI to depict a real person performing an act that did not occur with the intent to injure a political candidate or mislead the public about a ballot issue.44 Comparable bills have been introduced elsewhere.45

Other states have weighed transparency requirements for the use of AI in the workplace. Legislation introduced in Washington state in 2024, for example, would require employers to provide written disclosure before or within thirty days of beginning to use AI “to evaluate or otherwise make employment decisions regarding current employees.”46 The same legislation would also prohibit employers from using AI to replicate an employee’s likeness or voice without the employee’s explicit consent.47

AI Safety

Policymakers and technologists around the world are racing to assess the safety implications of AI models. National governments and international organizations have convened successive AI safety summits and established a nascent network of safety institutes, while legislative and executive measures have all sought to mitigate safety and security risks.48 At the same time, the AI safety landscape is highly contested, marked by starkly divergent views on the nature, likelihood, and severity of potential risks, as well as the cost-benefit ratio of precautionary guardrails.49 The reelection of Trump, who has already acted to repeal the Biden executive order and returns to the White House promising to chart a new course, adds significant uncertainty to the outlook for U.S. federal policy and existing diplomatic initiatives.50

Nevertheless, AI safety will remain on the diplomatic agenda. In the aftermath of the U.S. election, for example, members of the newly formed International Network of AI Safety Institutes gathered in late November 2024 in San Francisco to prepare for the upcoming Paris AI Action Summit this February. Furthermore, and importantly, AI safety policies are already embedded in model developers’ processes. Voluntary commitments agreed to by developers, such as those brokered by the White House in 2023 and the United Kingdom and South Korea in 2024, remain at least partly in place.51 And AI companies’ internal policies—such as OpenAI’s Preparedness Framework, Anthropic’s Responsible Scaling Policy, and Google DeepMind’s Frontier Safety Framework—demonstrate an awareness that AI safety is an important element of model development and governance. Finally, state-level liability rules embedded in tort law ensure that technology companies must weigh their financial exposure in the event that their models cause reasonably foreseeable injury or financial loss.52

Given the attention AI safety has received on the national and diplomatic stages, it is perhaps surprising how much a single U.S. state, California, featured in the global AI safety debate during the past year. That becomes less surprising, however, in view of California’s outsized profile in the global technology ecosystem, its role at the center of AI development, and its history of setting nationally and globally relevant standards in policy domains ranging from the environment to consumer protection, a dynamic referred to as the “California Effect.”53

California State Senator Scott Wiener’s SB 1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, touched off a pitched debate in 2024, exposing divisions among AI industry leaders, prominent AI researchers, and policymakers even in a jurisdiction marked by single-party control.54 SB 1047 would have imposed a duty of care on developers of the most sophisticated forthcoming AI models, requiring them to avoid causing or materially enabling catastrophic harms, to develop written safety protocols, and to build the capability to shut down models within their control. Despite concerns over the bill, SB 1047 survived furious opposition and multiple rounds of revision to pass the California legislature.

While Newsom ultimately vetoed SB 1047, he did so while expressing support for its underlying objectives and its preventive approach to catastrophic risk. He pledged to work with the legislature on a new AI safety proposal in the 2025 legislative session. Importantly, from a technology federalism perspective, he specifically defended the role of states at the forefront of AI safety governance:

To those who say there’s no problem here to solve, or that California does not have a role in regulating potential national security implications of this technology, I disagree. A California-only approach may well be warranted—especially absent federal action by Congress.55

In short, while safety remains at the forefront of national, international, and private sector efforts, it appears likely that California will continue making its own mark on a fast-moving, contested, and highly consequential global debate. Following Trump’s return to the White House, pressure on California to act as a counterweight to his policy agenda may grow. Likewise, other states may feel increased urgency, or see an opportunity, to establish AI safety standards individually or in concert if the federal government strikes a more accommodative stance.

Fairness and Discrimination

While safety questions have exposed fissures within the AI community, there has been agreement in many quarters that AI models present other risks that also require attention, such as the potential for discrimination. Researchers have warned that historical bias and disparate treatment reflected in the data used to train large language models can subtly and powerfully manifest in their predictive outputs, encoding and extending past injustices into a growing range of AI applications.56 AI could also render bias less scrutable through its incorporation into complex models whose human creators cannot anticipate outputs or disaggregate the inputs producing a given result.

Federal policymakers over the past several years have recognized these risks. The Biden administration’s Blueprint for an AI Bill of Rights warns that “algorithms used in hiring and credit decisions have been found to reflect and reproduce existing unwanted inequities or embed new harmful bias and discrimination.”57 It goes on to state that “designers, developers, and deployers of automated systems should take proactive and continuous measures to protect individuals and communities from algorithmic discrimination,” including through “proactive equity assessments” and “use of representative data and protection against proxies for demographic features.”58 It further endorses impact assessments and outside evaluations as measures to pressure test and confirm system fairness.

The Civil Rights Division of the U.S. Justice Department has also taken steps to coordinate interagency efforts on AI nondiscrimination, and a number of federal agencies have issued a Joint Statement on Enforcement of Civil Rights, Fair Competition, Consumer Protection, and Equal Opportunity Laws in Automated Systems.59 Meanwhile, the Artificial Intelligence Risk Management Framework established by the National Institute of Standards and Technology cautions that “AI systems can potentially increase the speed and scale of biases and perpetuate and amplify harms to individuals, groups, communities, organizations, and society.”60 Prominent legislators have also voiced their concern, with some arguing that algorithmic discrimination presents a clearer and more present issue than catastrophic safety risks.61

The Trump administration appears poised to chart a substantially different course on issues of equity and nondiscrimination.62 However, despite this gathering federal change, state-level policymaking has arguably been more definitive in seeking to mitigate the risk of AI-enabled discrimination. Colorado has been the first mover, enacting legislation (SB 24-205) in May 2024 to impose a duty of care on developers and deployers of “high risk artificial intelligence systems”—in other words, those with a material effect on consumers’ access to education, employment, lending, essential government services, housing, insurance, or legal services.63

The Colorado law, which is due to take effect in 2026, also imposes disclosure and governance obligations. It requires developers to provide deployers with information about (1) the uses, benefits, and risks of their systems; (2) their training data; and (3) their implementation of evaluation, governance, and mitigation efforts to prevent algorithmic discrimination. Deployers, in turn, must maintain a Risk Management Policy and Program, specifying how they will identify and mitigate discrimination risk. Before an AI system can be used to make a “consequential decision,” the deployer must disclose the use of AI to impacted consumers in a timely manner and in plain language. In the event of an adverse decision, such as a denial of benefits, the law mandates that consumers be afforded an additional explanation as well as the right to correct inaccurate information that contributed to the decision and the right to appeal to a human decisionmaker.

While the Colorado law stands as an example of determined state action, it also underscores a core takeaway from California’s experience with SB 1047. There remains significant trepidation, even within traditional policy coalitions, on how best to strike a balance between fostering and regulating AI. Colorado’s SB 24-205 passed despite significant concerns from Governor Jared Polis, who cautioned that the bill could overburden industry, stifle innovation, and contribute to a thicket of inconsistent state rules and that it lacked essential guardrails such as an intent requirement.64

The Colorado legislation was not developed in a vacuum, however. Rather, it emerged from a broad multistate effort to coordinate baseline obligations for AI systems. That collaboration was spearheaded by Connecticut State Senator James Maroney, who convened a working group of more than 100 state legislators from more than two dozen states as well as a second Connecticut-specific group to explore potential impacts and regulatory approaches to AI.65 In partnership with lawmakers from Colorado and elsewhere, Maroney and the Connecticut group produced a draft bill on algorithmic discrimination, SB 2, which served as the template for Colorado’s SB 24-205.66

SB 2 passed the Connecticut Senate last April and appeared to have significant support in the House before industry opposition persuaded the state’s governor, Ned Lamont, to threaten a veto, derailing it in the 2024 legislative session.67 However, Maroney promptly pledged to renew his efforts, and, joined by a majority of the state’s senators, he has already proposed a successor bill in the 2025 session.68

Maroney has predicted that “a dozen or more states” will propose comparable legislation.69 Thus, while it is premature to say whether the approach embodied in Connecticut’s bill and Colorado’s newly enacted law will take root nationally, it appears likely that state policymakers will continue working to mitigate bias from AI applications impacting consequential decisions about their residents’ lives.

Sovereign AI Efforts and Public Interest Compute

The most advanced AI models have typically required immense inputs of data and computing power to develop. Training-run costs approach or exceed $100 million for some released models, and this figure has been projected to pass $1 billion by 2027.70 In recent days, however, DeepSeek, a Chinese AI developer, has dramatically upended these projections—and the assumptions underpinning frontier model development and the global semiconductor industry—by releasing R1, an advanced model approximating the performance of its leading American counterparts.71 R1 was apparently developed using vastly lower computing power and at markedly lower cost than the most cutting-edge U.S. incumbents.72

The full implications of DeepSeek’s accomplishment are still coming into focus. However, computing infrastructure remains a vital and highly expensive prerequisite to advanced AI research and development. Access to sophisticated GPU processors remains finite. And while DeepSeek’s progress in efficient model development may impact long-term chip demand, computing costs for competitive private sector AI developers have already skyrocketed in recent years.73 OpenAI, for example, was projected to spend more than $5 billion on computing costs in 2024 alone.74 Fueling these outlays, AI firms have raised and invested well over $100 billion in the past year.75

Massively capitalized and with unmatched access to the resources needed for cutting-edge development, large AI developers are rapidly outpacing the public and not-for-profit sectors in their ability to recruit the third critical input for AI research and development: highly skilled researchers, engineers, and other talent upon whom cutting-edge development depends.

These barriers have prompted worry that progress will increasingly concentrate within a handful of institutions, above all private companies with significant commercial interests.76 Accordingly, there is concern that the vast inputs of data, compute, and talent will focus on developing monetizable applications rather than addressing the scientific and social challenges of greatest public benefit. Additionally, observers and governments outside the subset of countries where AI development is centered worry that AI will privilege the interests of a few major powers at the expense of the global majority.77

Over the past few years, these concerns have prompted calls for public investment in shared local computing infrastructure, or “public” or “sovereign” compute.78 In 2020, for example, more than twenty leading universities issued a joint letter urging the United States government to create a “national research cloud” to support academic and public interest AI research.79 Warning that brain drain and the skyrocketing cost and diminished accessibility of computing hardware and data were combining to threaten vital research, the signatories recommended that this new national resource pursue at least three aims: to “provide academic and public interest researchers with free or substantially discounted access to the advanced hardware and software required to develop new fundamental AI technologies and applications in the public interest,” to provide “expert personnel necessary to deploy these advanced technologies at universities across the country,” and to “redouble [government agencies’] efforts to make more and better quality data available for public research at no cost.”80

With bipartisan and private sector support, these recommendations were adopted in the National Artificial Intelligence Initiative Act of 2020, which directed interagency efforts—including a task force led by the National Science Foundation (NSF) and the White House Office of Science and Technology Policy—to develop a road map for the creation of such a national resource.81 A final road map and implementation plan were released in January 2023, and subsequently, a pilot was established within the NSF. In July 2023, federal legislation, called the CREATE AI Act, was introduced to establish a National AI Research Resource (NAIRR), though it did not pass before the end of the 118th Congress.82

On the global stage, numerous governments have extolled the importance of national AI capabilities for strategic and economic success in the twenty-first century.83 Increasingly, nations are investing in sovereign AI capabilities, encompassing investments in domestic infrastructure, hardware, data, and expertise, as well as a host of regulatory efforts to advance their competitiveness, promote local innovation and economic growth, and enhance their ability to secure national interests in an age of disruption.

Public computing would therefore seem to be an area where national governments are vigorously engaged and geostrategic imperatives predominate. Nevertheless, a handful of U.S. states have entered the picture, advancing proposals to invest in public-interest computing infrastructure of their own to support socially beneficial research and development.

New York, for example, recently launched a $400 million project called Empire AI to create an AI computing center and support public-interest research and development among a consortium of local universities and foundations.84 The project is designed to help participating institutions—and the state as a whole—attract and retain top-flight AI talent, leverage economies of scale, and pursue public-interest AI research that might otherwise founder for lack of resources.

In California, a similar proposal tucked into the AI safety bill, SB 1047, attracted significant support. While that legislation focused on catastrophic risk reduction, it also included language to create a new public cloud computing cluster called CalCompute to foster “research and innovation that benefits the public” and enable “equitable innovation by expanding access to computational resources.”85 It also required California’s executive branch to provide the legislature with a report detailing the cost and funding sources for CalCompute, recommendations for its structure and governance, opportunities to bolster the state’s AI workforce, and other topics.

While SB 1047 did not become law, CalCompute garnered support even from high-profile opponents of the overall bill.86 Newsom’s pledge to continue working with the legislature on follow-up AI legislation leaves much for deliberation this year. At a minimum, it is far from certain that CalCompute is off the table. Its resurrection may grow more likely after the recent federal election, as California seeks to demonstrate leadership independent of Washington, DC, and to counterbalance the incoming presidential administration and a Congress whose commitment to durably establishing and funding the NAIRR remains uncertain.

On the one hand, state programs may invite redundancy and are unlikely to match the scale of congressional appropriations. On the other hand, if successful, projects like Empire AI and CalCompute would secure multiple state objectives.87 First, they would bolster local academic research centers and entrepreneurs, equipping them to compete for talent and pursue socially beneficial but cost-prohibitive research. Second, they would help seed early-stage entrepreneurship, laying the groundwork for innovation and business formation, with all the reputational and economic advantages that could bring. Third, by building out research clusters and hubs of AI development, state-sponsored computing resources would foster network effects and advance an aim shared by many states: not only to promote their own ability to harness and deploy AI, but also to position themselves as favorable climates for further investment and entrepreneurship. Finally, success in attracting, incubating, and hosting a meaningful share of the burgeoning global AI market could strengthen states’ hand as regulators, positioning them to shape how AI’s deployment affects their constituents, societies, and operations over the years to come.

Nation-states have long recognized these imperatives and are moving to compete and shape their futures as new capabilities of automation change their domestic and geostrategic environments. Mindful of a comparable risk and opportunity landscape, some U.S. states are likewise placing their bets and seeking a seat at the table.

Conclusion

Across a swath of the most globally charged topics raised by AI, states are at the leading edge of the U.S. policy response. To an as-yet underappreciated extent, this is typical of a new federalism emergent in technology regulation.88 This phenomenon is neither wholly good nor bad, but is, for the time being, an essential facet of U.S. technology policy. As the United States enters a period of uncertainty and potential upheaval, it is likely that state efforts will grow. This expansion may test not only the limits of state authority and political will, but also states’ capacity, individually or in concert, to influence a massive and growing global industry.

In a space as fast moving as AI, prediction is difficult. However, there is strong evidence that issues such as misinformation, safety, bias, and the evolving barriers to research and development will loom large on the policy agenda this year. In each area, it appears that technology federalism will be an important element of the overall U.S. response.

About the Author

Scott Kohler is a nonresident scholar at Carnegie California.

Carnegie does not take institutional positions on public policy issues; the views represented herein are those of the author(s) and do not necessarily reflect the views of Carnegie, its staff, or its trustees.

More Work from Carnegie Endowment for International Peace

- How Far Can Russian Arms Help Iran?Commentary

Arms supplies from Russia to Iran will not only continue, but could grow significantly if Russia gets the opportunity.

Nikita Smagin

- Is a Conflict-Ending Solution Even Possible in Ukraine?Commentary

On the fourth anniversary of Russia’s full-scale invasion, Carnegie experts discuss the war’s impacts and what might come next.

- +1

Eric Ciaramella, Aaron David Miller, Alexandra Prokopenko, …

- Indian Americans Still Lean Left. Just Not as Reliably.Commentary

New data from the 2026 Indian American Attitudes Survey show that Democratic support has not fully rebounded from 2020.

- +1

Sumitra Badrinathan, Devesh Kapur, Andy Robaina, …

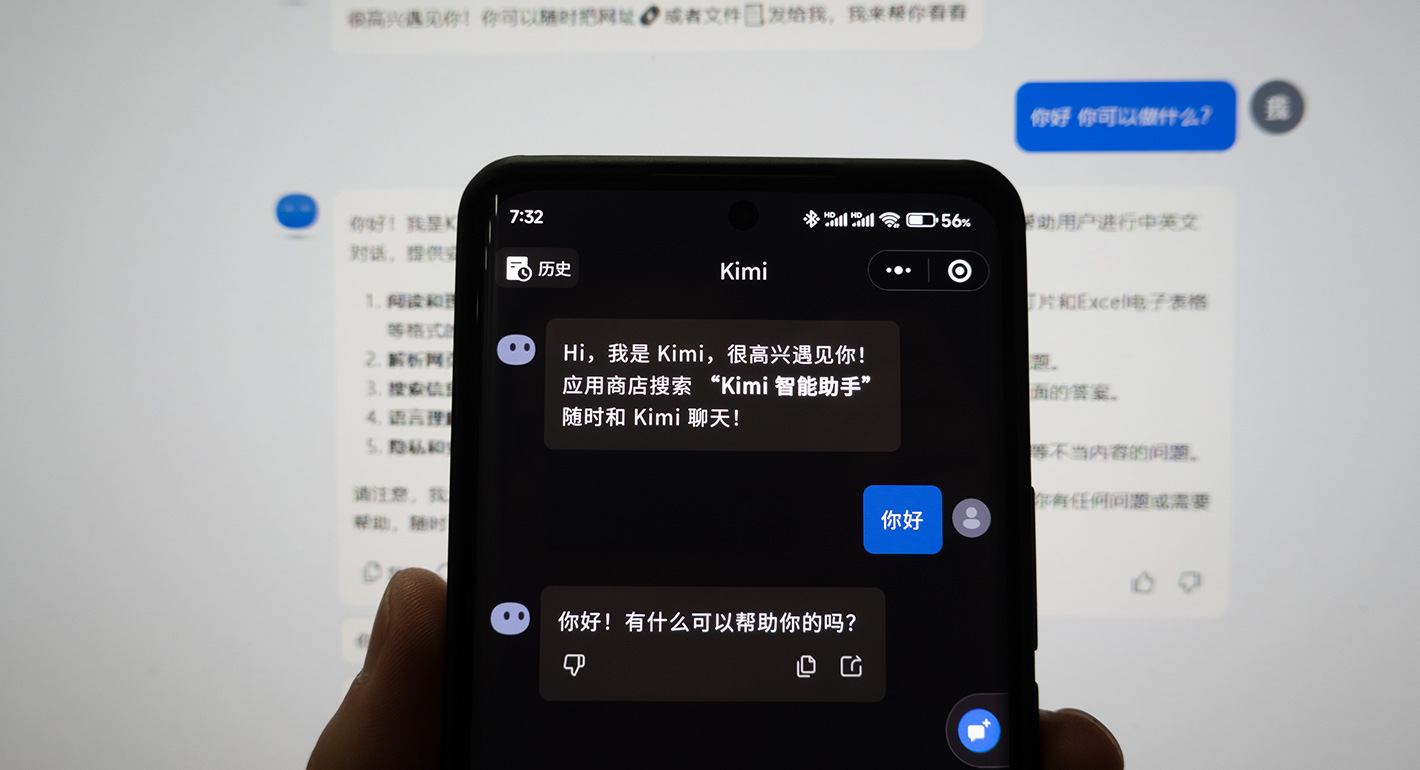

- China Is Worried About AI Companions. Here’s What It’s Doing About Them.Article

A new draft regulation on “anthropomorphic AI” could impose significant new compliance burdens on the makers of AI companions and chatbots.

Scott Singer, Matt Sheehan

- Trump’s State of the Union Was as Light on Foreign Policy as He Is on StrategyCommentary

The speech addressed Iran but said little about Ukraine, China, Gaza, or other global sources of tension.

Aaron David Miller