Jon Bateman



Impact of Disinformation and of Misinformation on the Work of Parliamentarians

In recent years, democracies worldwide have grown increasingly concerned about threats to the integrity of their information environments—including misinformation, disinformation, and foreign influence.

Testimony before the Canadian House of Commons Standing Committee on Access to Information, Privacy and Ethics

Committee members, it’s an honor to appear at this important hearing.

My name is Jon Bateman. I’m a Senior Fellow and Co-Director of the Technology and International Affairs Program at the Carnegie Endowment for International Peace. Carnegie is an independent, non-profit think tank with headquarters in Washington, D.C. and global centers in Europe, Asia, and the Middle East.

In recent years, democracies worldwide have grown increasingly concerned about threats to the integrity of their information environments—including misinformation, disinformation, and foreign influence. My Carnegie colleagues and I have drawn on empirical evidence to clarify the nature and extent of these threats, and to assess the promise and pitfalls of potential countermeasures.

Today I will share some overarching lessons from this research. To be clear, I am not an expert on the Canadian situation specifically, so I may not be able to give detailed answers about particular incidents or unique dynamics in your country. Instead, I will highlight key themes applicable across democracies.

~~~

Let me start with some important foundations.

As you have already heard, misinformation can refer to any false claim, whereas disinformation implies an intentional effort to deceive. Foreign influence can be harder to define, because it requires legal or normative judgments about the boundaries of acceptable foreign participation in domestic discourse, and those boundaries are sometimes unclear.

Foreign actors often use mis- and disinformation. But they also use other tools—like co-optation, coercion, overt propaganda, and even violence. These activities can pose serious threats to a country’s information integrity.

Still, it is domestic actors—ordinary citizens, politicians, activists, corporations—who are the major sources of mis- and disinformation in most democracies. This should not be surprising. Domestic actors are generally more numerous, well-resourced, politically sophisticated, deeply embedded within society, and invested in domestic political outcomes.

~~~

Defining and differentiating these threats is hard enough. Applying and acting on the definitions is much harder.

Calling something mis- or disinformation requires invoking some source of authoritative truth. Yet people in democracies can and should disagree about what is true. Such disagreements are inevitable and essential for driving scientific progress and social change. Overzealous efforts to police the information environment can transgress democratic norms or deepen societal distrust.

However, not all factual disputes are legitimate or productive. We must acknowledge that certain malicious falsehoods are undermining democratic stability and governance around the world. A paradigmatic example is the claim that the 2020 U.S. presidential election was stolen. This is provably false, it was put forward with demonstrated bad faith, and it has deeply destabilized the country.

Mis- and disinformation are highly imperfect concepts. But they do capture something very real and dangerous that demands concerted action.

~~~

So, what should be done? In our recent report, Dean Jackson and I surveyed a wide range of countermeasures—from fact-checking, to foreign sanctions, to adjustments of social media algorithms. Drawing on hundreds of scientific studies and other real-world data, we asked three fundamental questions: How much is known about each measure? How effective does it seem, given what we know? And how scalable is it?

Unfortunately, we found no silver bullet. None of the interventions were well-studied, very effective, and easy to scale, all at the same time.

Some may find this unsurprising. After all, disinformation is an ancient, chronic phenomenon driven by stubborn forces of supply and demand. On the supply side, social structures combine with modern technology to create powerful political and commercial incentives to deceive. On the demand side, false narratives can satisfy real psychological needs. These forces are far from unstoppable. Yet policymakers often have limited resources, knowledge, political will, legal authority, and civic trust.

Thankfully, our research does suggest that many popular countermeasures are both credible and useful. The key is what we call a “portfolio approach.” This means pursuing a diversified mixture of multiple policies with varying levels of risk and reward. A healthy portfolio would include tactical actions, like fact-checking and labeling social media content, that seem fairly well-researched and effective. It would also involve costlier, longer-term bets on promising structural reforms—such as financial support for local journalism and media literacy.

Let me close by observing that most democracies do not yet have a balanced portfolio. They are underinvesting in the most ambitious reforms with higher costs and longer lead times. If societies can somehow meet the big challenges—like reviving local journalism and bolstering media literacy for the digital age—the payoff could be enormous.

Thank you, and I look forward to your questions.

About the Author

Senior Fellow and Co-Director, Technology and International Affairs Program

Jon Bateman is a senior fellow and co-director of the Technology and International Affairs Program at the Carnegie Endowment for International Peace.


Carnegie does not take institutional positions on public policy issues; the views represented herein are those of the author(s) and do not necessarily reflect the views of Carnegie, its staff, or its trustees.

More Work from Carnegie Endowment for International Peace

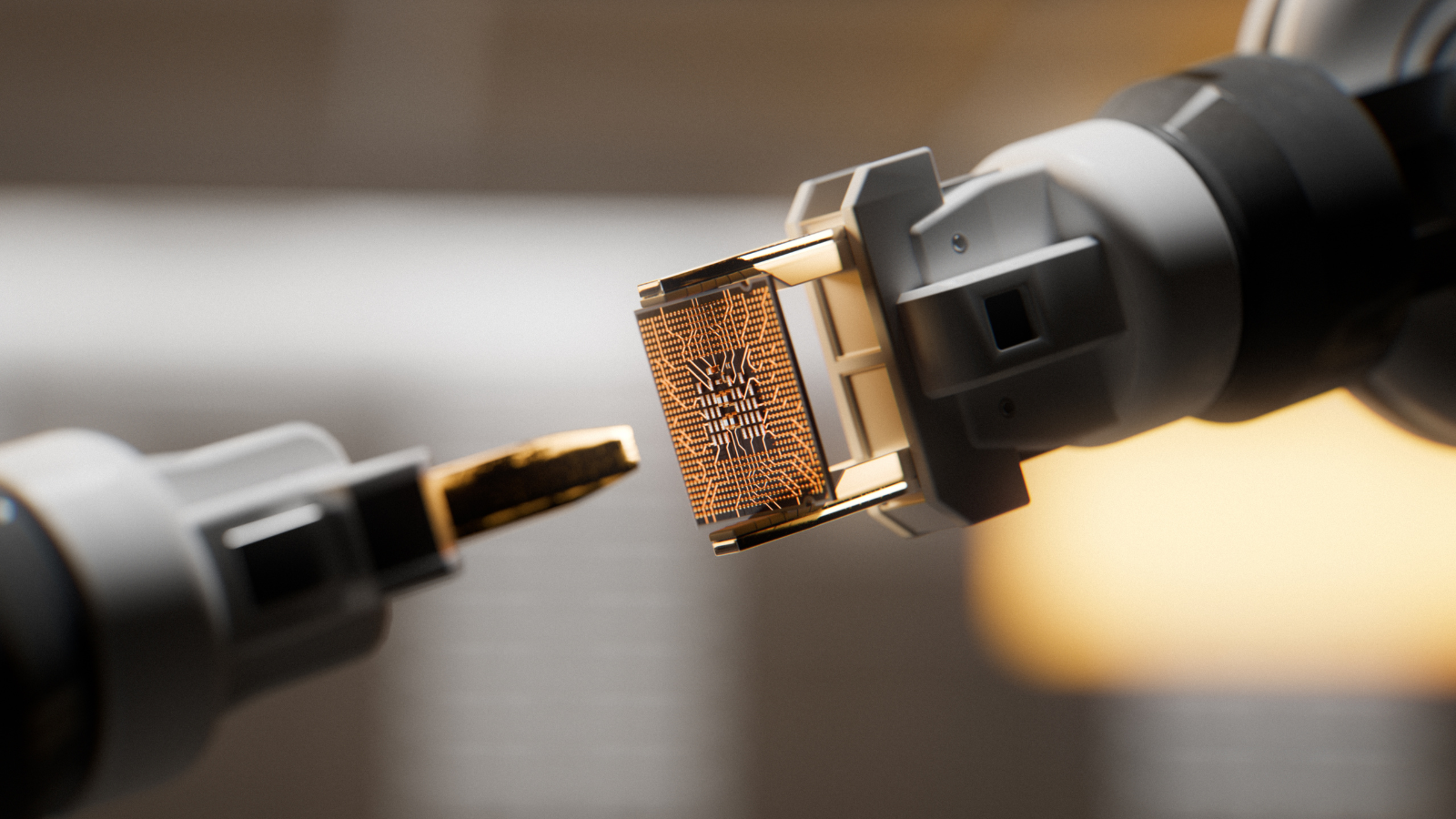

- India Signs the Pax Silica—A Counter to Pax Sinica?Commentary

On the last day of the India AI Impact Summit, India signed Pax Silica, a U.S.-led declaration seemingly focused on semiconductors. While India’s accession to the same was not entirely unforeseen, becoming a signatory nation this quickly was not on the cards either.

Konark Bhandari

- What We Know About Drone Use in the Iran WarCommentary

Two experts discuss how drone technology is shaping yet another conflict and what the United States can learn from Ukraine.

Steve Feldstein, Dara Massicot

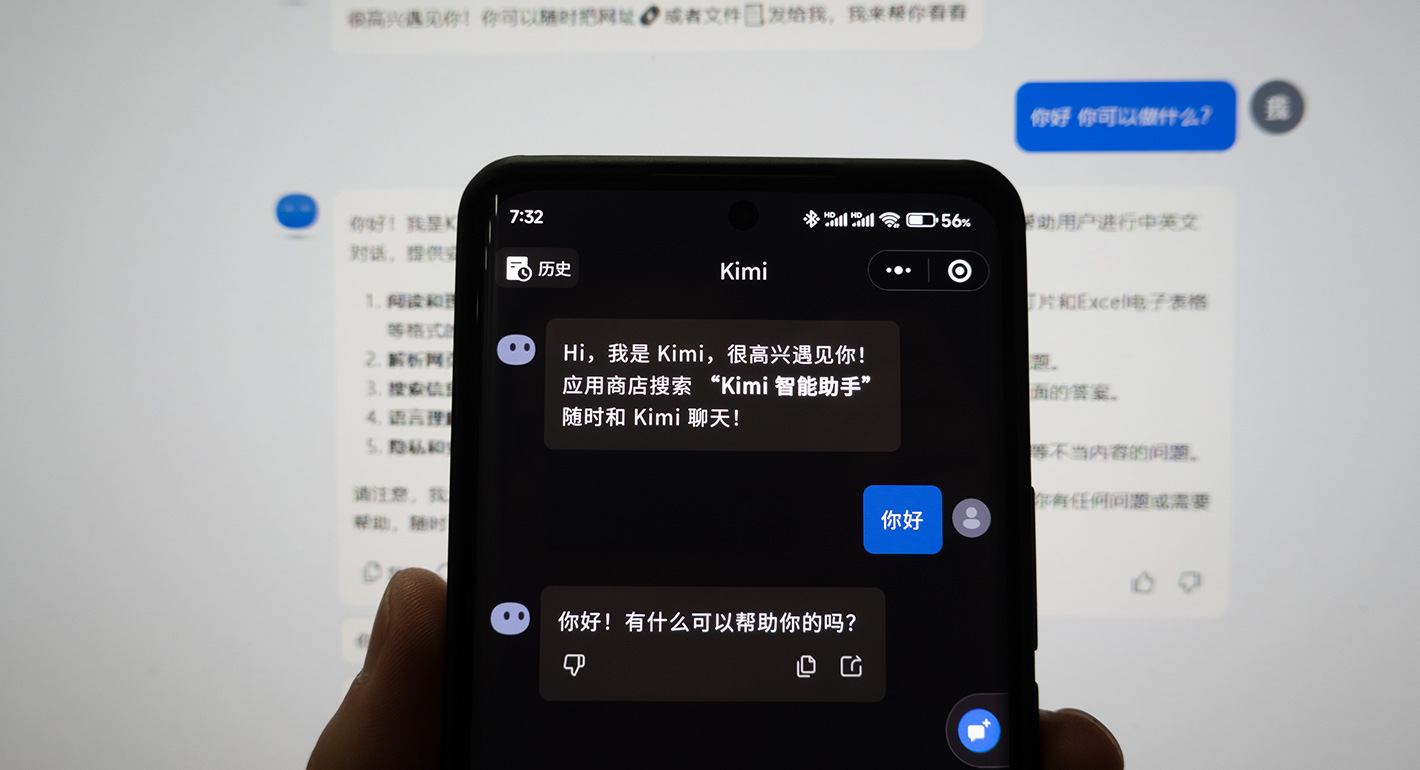

- China Is Worried About AI Companions. Here’s What It’s Doing About Them.Article

A new draft regulation on “anthropomorphic AI” could impose significant new compliance burdens on the makers of AI companions and chatbots.

Scott Singer, Matt Sheehan

- How Will the Loss of Starlink and Telegram Impact Russia’s Military?Commentary

With the blocking of Starlink terminals and restriction of access to Telegram, Russian troops in Ukraine have suffered a double technological blow. But neither service is irreplaceable.

Maria Kolomychenko

- How Europe Can Survive the AI Labor TransitionCommentary

Integrating AI into the workplace will increase job insecurity, fundamentally reshaping labor markets. To anticipate and manage this transition, the EU must build public trust, provide training infrastructures, and establish social protections.

Amanda Coakley